Next: 14.1 The Block Store Up: Open Data Structures (in Previous: 13.4 Discussion and Exercises Contents

Throughout this book, we have been using the

![]() -bit word-RAM model

of computation defined in Section 1.3. An implicit assumption of

this model is that our computer has a large enough random access memory

to store all the data in the data structure. In some situations, this

assumption is not valid. There exist collections of data so big that

there is no computer with a memory large enough to store all the data.

In such cases, the application must resort to storing the data on some

external storage medium such as a hard disk, a solid state disk, or even

a network file server (which has its own external storage).

-bit word-RAM model

of computation defined in Section 1.3. An implicit assumption of

this model is that our computer has a large enough random access memory

to store all the data in the data structure. In some situations, this

assumption is not valid. There exist collections of data so big that

there is no computer with a memory large enough to store all the data.

In such cases, the application must resort to storing the data on some

external storage medium such as a hard disk, a solid state disk, or even

a network file server (which has its own external storage).

Accessing an item from the external storage is extremely slow. The hard disk attached to the computer on which this book is being written has an average access time of 19ms and the solid state drive attached to this computer has an average access time of 0.3ms. In contrast, the random access memory in this computer has an average access time of less than 0.000113ms. It is more than 2500 times faster to access RAM than the solid state drive and more than 160000 times faster to access RAM than the hard drive.

These speeds are fairly typical; accessing a random byte from RAM is thousands of times faster than accessing a random byte from a hard disk or solid-state drive. Access time, however, does not tell the whole story. When we access a byte from a hard disk or solid state disk, an entire block of the disk is read. Each of the drives attached to this computer has a block size of 4096; each time we read one byte, the drive gives us a block containing 4096 bytes. If we organize our data structure carefully, this means that each disk access could yield 4096 bytes that are helpful in completing whatever operation we are doing.

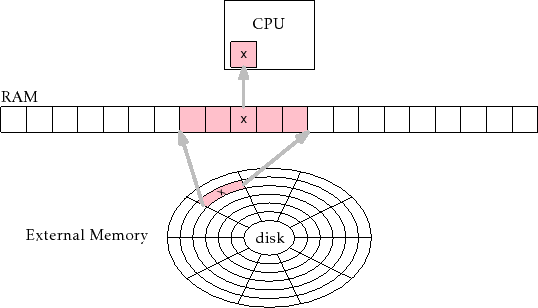

This is the idea behind the external memory model of computation,

illustrated schematically in Figure 14.1. In this model, the computer

has access to a large external memory where all the data resides.

This memory is divided into memory blocks each containing ![]() words. The computer also has a limited internal memory on which it can

perform computations. Transferring a block between internal memory and

external memory takes constant time. Computations performed within the

internal memory are free; they take no time at all. The fact

that internal memory computations are free may seem a bit strange,

but this is really just to emphasize the fact that external memory is

so much slower than RAM.

words. The computer also has a limited internal memory on which it can

perform computations. Transferring a block between internal memory and

external memory takes constant time. Computations performed within the

internal memory are free; they take no time at all. The fact

that internal memory computations are free may seem a bit strange,

but this is really just to emphasize the fact that external memory is

so much slower than RAM.

|

In the full-blown external memory model, the size of the internal

memory is also a parameter. However, for the data structures described

in this chapter, it is sufficient to have an internal memory of size

![]() . That is, the memory needs to be capable of storing

a constant number of blocks and a recursion stack of height

. That is, the memory needs to be capable of storing

a constant number of blocks and a recursion stack of height

![]() . In most cases, the

. In most cases, the ![]() term dominates the memory requirement.

For example, even with the relatively small value

term dominates the memory requirement.

For example, even with the relatively small value ![]() ,

,

![]() for all

for all

![]() . In decimal,

. In decimal,

![]() for any

for any

![]() .

.