Most of the data structures described in this chapter are folklore. They

can be found in implementations dating back over 30 years. For example,

implementations of stacks, queues, and deques which generalize easily

to the ArrayStack, ArrayQueue and ArrayDeque structures described

here are discussed by Knuth [39, Section 2.2.2].

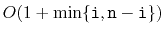

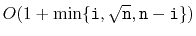

Brodnik et al. [10] seem to have been the first to describe

the RootishArrayStack and prove a  lower-bound like that

in Section 2.6.2. They also present a different structure

that uses a more sophisticated choice of block sizes in order to avoid

computing square roots in the

lower-bound like that

in Section 2.6.2. They also present a different structure

that uses a more sophisticated choice of block sizes in order to avoid

computing square roots in the

method. With their scheme, the

block containing

method. With their scheme, the

block containing

is block

is block

, which is just

the index of the leading 1 bit in the binary representation of

, which is just

the index of the leading 1 bit in the binary representation of

.

Some computer architectures provide an instruction for computing the

index of the leading 1-bit in an integer.

.

Some computer architectures provide an instruction for computing the

index of the leading 1-bit in an integer.

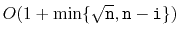

A structure related to the RootishArrayStack is the  -level

tiered-vector of Goodrich and Kloss [30]. This structure

supports

-level

tiered-vector of Goodrich and Kloss [30]. This structure

supports

and

and

in constant time and

in constant time and

and

and

in

in

time. These running times are similar

to what can be achieved with the more careful implementation of a

RootishArrayStack discussed in Exercise 2.9.

time. These running times are similar

to what can be achieved with the more careful implementation of a

RootishArrayStack discussed in Exercise 2.9.

Exercise 2..1

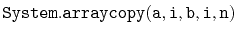

The

List method

inserts all elements of the

Collection

into the list at position

. (The

method is a special

case where

.) Explain why, for the data structures

in this chapter, it is not efficient to implement

by

repeated calls to

. Design and implement a more efficient

implementation.

Exercise 2..2

Design and implement a

RandomQueue. This is an implementation

of the

Queue interface in which the

operation removes an

element that is chosen uniformly at random among all the elements in

the queue. The

and

operations in a

RandomQueue

should take constant time.

Exercise 2..3

Design and implement a

Treque (triple-ended queue). This is a

List

implementation in which

and

run in constant time

and

and

run in time

With this running time, modifications are fast if they are near either

end or near the middle of the list.

Exercise 2..4

Implement a method

that ``rotates'' a

List so that

list item

becomes list item

. When run on

an

ArrayDeque, or a

DualArrayDeque,

should run in

.

Exercise 2..5

Modify the

ArrayDeque implementation so that the shifting

done by

,

, and

is done using

.

Exercise 2..6

Modify the

ArrayDeque implementation so that it does not use the

operator (which is expensive on some systems). Instead, it

should make use of the fact that, if

is a power of 2,

then

. (Here,

is the bitwise-and

operator.)

Exercise 2..7

Design and implement a variant of

ArrayDeque that does not do any

modular arithmetic at all. Instead, all the data sits in a consecutive

block, in order, inside an array. When the data overruns the beginning

or the end of this array, a modified

operation is performed.

The amortized cost of all operations should be the same as in an

ArrayDeque.

Hint: Making this work is really all about how a

operation

is performed. You would like

operation

is performed. You would like

to put the data structure

into a state where the data cannot run off either end until at least

to put the data structure

into a state where the data cannot run off either end until at least

operations have been performed.

operations have been performed.

Test the performance of your implementation against the ArrayDeque.

Optimize your implementation (by using

)

and see if you can get it to outperform the ArrayDeque implementation.

)

and see if you can get it to outperform the ArrayDeque implementation.

Exercise 2..8

Design and implement a version of a

RootishArrayStack that has

only

wasted space, but that can perform

and

operations in

time.

Exercise 2..9

Design and implement a version of a

RootishArrayStack that has

only

wasted space, but that can perform

and

operations in

time. (For an idea on how to do this, see Section

3.3.)

Exercise 2..10

Design and implement a version of a

RootishArrayStack that has

only

wasted space, but that can perform

and

operations in

time.

(See Section

3.3 for ideas on how to achieve this.)

opendatastructures.org

![]() lower-bound like that

in Section 2.6.2. They also present a different structure

that uses a more sophisticated choice of block sizes in order to avoid

computing square roots in the

lower-bound like that

in Section 2.6.2. They also present a different structure

that uses a more sophisticated choice of block sizes in order to avoid

computing square roots in the

![]() method. With their scheme, the

block containing

method. With their scheme, the

block containing

![]() is block

is block

![]() , which is just

the index of the leading 1 bit in the binary representation of

, which is just

the index of the leading 1 bit in the binary representation of

![]() .

Some computer architectures provide an instruction for computing the

index of the leading 1-bit in an integer.

.

Some computer architectures provide an instruction for computing the

index of the leading 1-bit in an integer.

![]() -level

tiered-vector of Goodrich and Kloss [30]. This structure

supports

-level

tiered-vector of Goodrich and Kloss [30]. This structure

supports

![]() and

and

![]() in constant time and

in constant time and

![]() and

and

![]() in

in

![]() time. These running times are similar

to what can be achieved with the more careful implementation of a

RootishArrayStack discussed in Exercise 2.9.

time. These running times are similar

to what can be achieved with the more careful implementation of a

RootishArrayStack discussed in Exercise 2.9.

![]() operation

is performed. You would like

operation

is performed. You would like

![]() to put the data structure

into a state where the data cannot run off either end until at least

to put the data structure

into a state where the data cannot run off either end until at least

![]() operations have been performed.

operations have been performed.

![]() )

and see if you can get it to outperform the ArrayDeque implementation.

)

and see if you can get it to outperform the ArrayDeque implementation.