Next: 10. Heaps Up: 9. Red-Black Trees Previous: 9.3 Summary Contents

Red-black trees were first introduced by Guibas and Sedgewick [32]. Despite their high implementation complexity they are found in some of the most commonly used libraries and applications. Most algorithms and data structures discuss some variant of red-black trees.

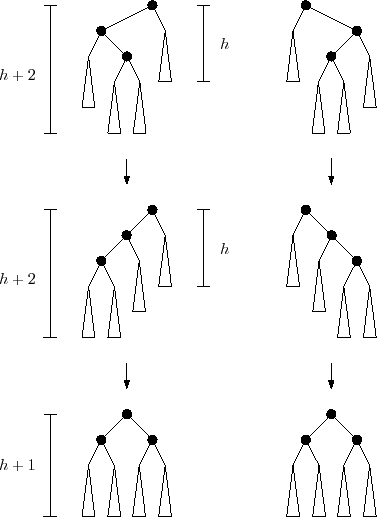

Andersson [4] describes a left-leaning version of balanced trees

that are similar to red-black trees but have the additional constraint

that any node has at most one red child. This implies that these trees

simulate 2-3 trees rather than 2-4 trees. They are significantly simpler,

though, than the

![]() structure presented in this chapter.

structure presented in this chapter.

Sedgewick [55] describes at least two versions of left-leaning red-black trees. These use recursion along with a simulation of top-down splitting and merging in 2-4 trees. The combination of these two techniques makes for particularly short and elegant code.

A related, and older, data structure is the AVL tree [2].

AVL trees are height-balanced: At each node ![]() , the height

of the subtree rooted at

, the height

of the subtree rooted at

![]() and the subtree rooted at

and the subtree rooted at

![]() differ by at most one. It follows immediately that, if

differ by at most one. It follows immediately that, if ![]() is the

minimum number of leaves in a tree of height

is the

minimum number of leaves in a tree of height ![]() , then

, then ![]() obeys the

Fibonacci recurrence

obeys the

Fibonacci recurrence

|

Andersson's variant of red-black trees, Sedgewick's variant of red-black

trees, and AVL trees are all simpler to implement than the

![]() structure defined here. Unfortunately, none of them can guarantee that

the amortized time spent rebalancing is

structure defined here. Unfortunately, none of them can guarantee that

the amortized time spent rebalancing is ![]() per update. In particular,

there is no analogue of Theorem 9.2 for those structures.

per update. In particular,

there is no analogue of Theorem 9.2 for those structures.